Today, Mistral announces Mistral Large 2, the next generation of its flagship model. Compared to its predecessor, Mistral Large 2 is significantly more capable in code generation, mathematics, and reasoning. It also provides much stronger multilingual support and advanced function calling capabilities.

This latest generation continues to push the boundaries of cost efficiency, speed, and performance. Mistral Large 2 is available on la Plateforme, which has been enriched with new features to facilitate building innovative AI applications.

Large 2 Model Capabilities

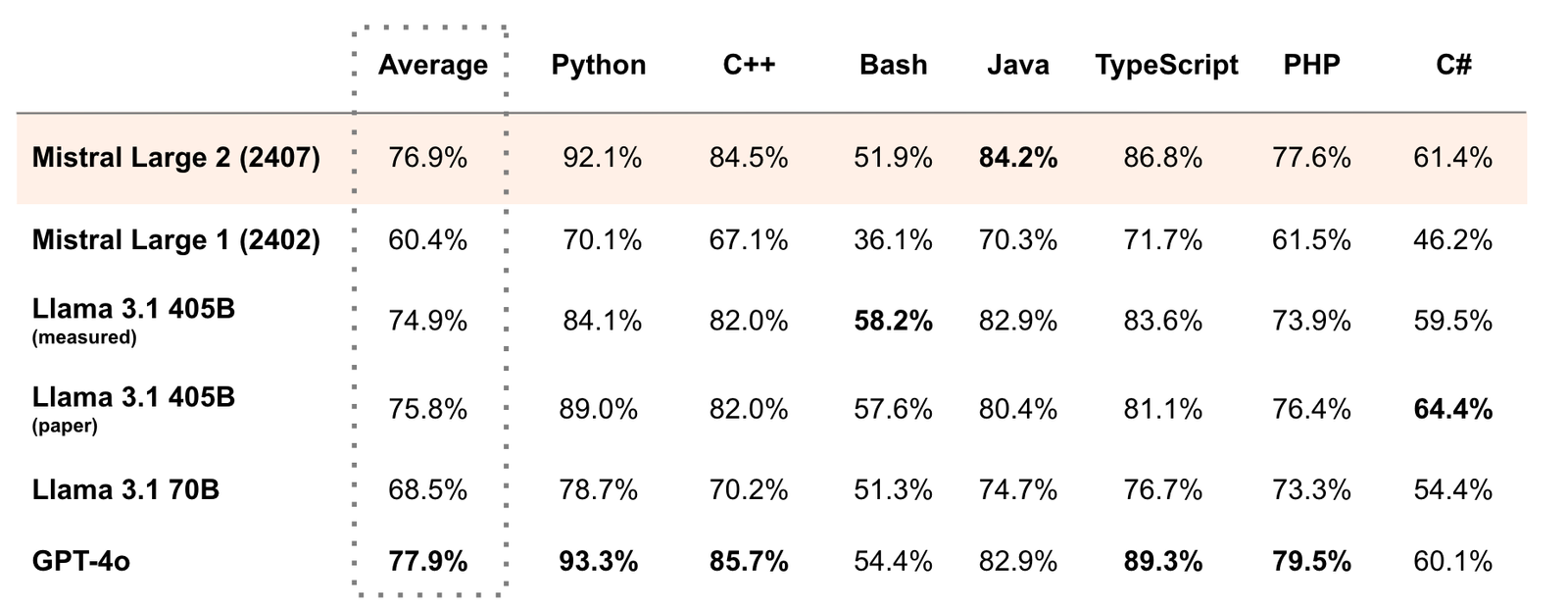

Mistral Large 2 has a 128k-token context window and supports dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean, along with more than 80 programming languages, including Python, Java, C, C++, JavaScript, and Bash.

Mistral Large 2 is designed for single-node inference with long-context applications in mind. At 123 billion parameters, it is sized to run with high throughput on a single node. Mistral Large 2 is released under the Mistral Research License, which allows usage and modification for research and non-commercial purposes. For commercial use that requires self-deployment, a Mistral Commercial License must be obtained by contacting Mistral.
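As a rough illustration of why a 123-billion-parameter model can be served from one node, the sketch below estimates the memory taken by the weights alone in a few common numeric formats. The node shape (8 GPUs with 80 GB each) and the bytes-per-parameter table are assumptions chosen for illustration, not details published by Mistral, and the estimate ignores the KV cache, activations, and runtime overhead, all of which grow with long contexts.

```python
# Back-of-envelope sketch (assumptions, not Mistral's serving setup):
# estimate whether the raw weights of a 123B-parameter model fit on one node.

PARAMS = 123e9  # parameter count stated in the text

# Assumed bytes per parameter for common weight formats (weights only).
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Memory needed for the raw weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def node_memory_gb(gpus_per_node: int = 8, gb_per_gpu: int = 80) -> int:
    """Total GPU memory of a hypothetical node (default: 8 x 80 GB)."""
    return gpus_per_node * gb_per_gpu


def headroom_gb(params: float, dtype: str) -> float:
    """Memory left over on the node for KV cache, activations, etc."""
    return node_memory_gb() - weight_memory_gb(params, dtype)


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        w = weight_memory_gb(PARAMS, dtype)
        h = headroom_gb(PARAMS, dtype)
        print(f"{dtype}: weights {w:.0f} GB, headroom {h:.0f} GB")
```

In bf16, the weights take roughly 246 GB, leaving several hundred gigabytes on such a node for the KV cache that long-context requests need.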

General performance

Mistral Large 2 sets a new frontier in performance relative to serving cost on evaluation metrics. On MMLU, the pretrained version achieves an accuracy of 84.0%, setting a new point on the performance/cost Pareto front of open models.

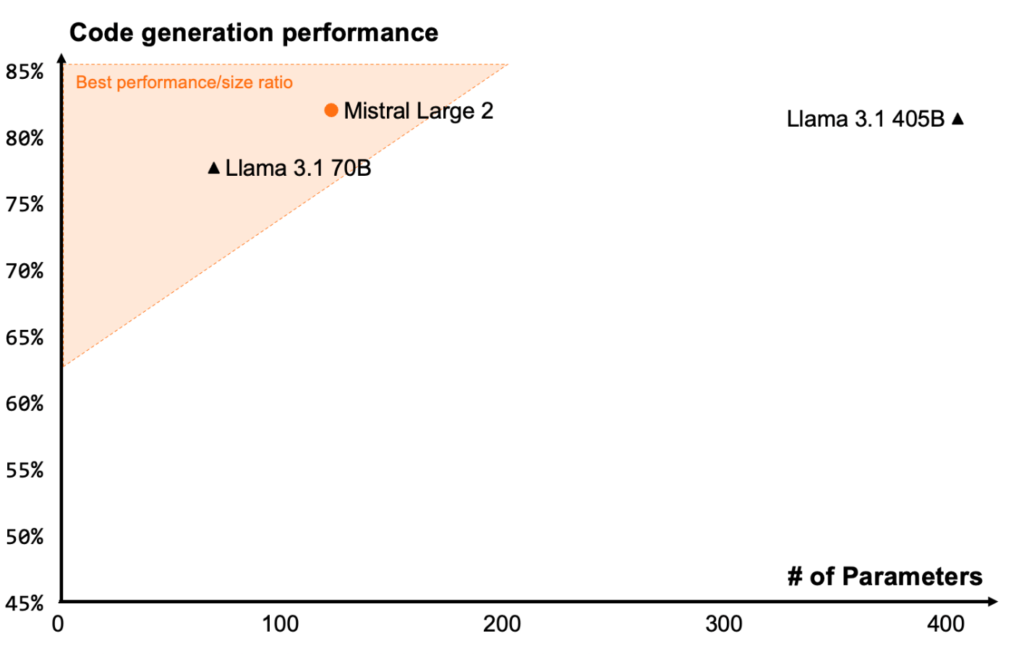

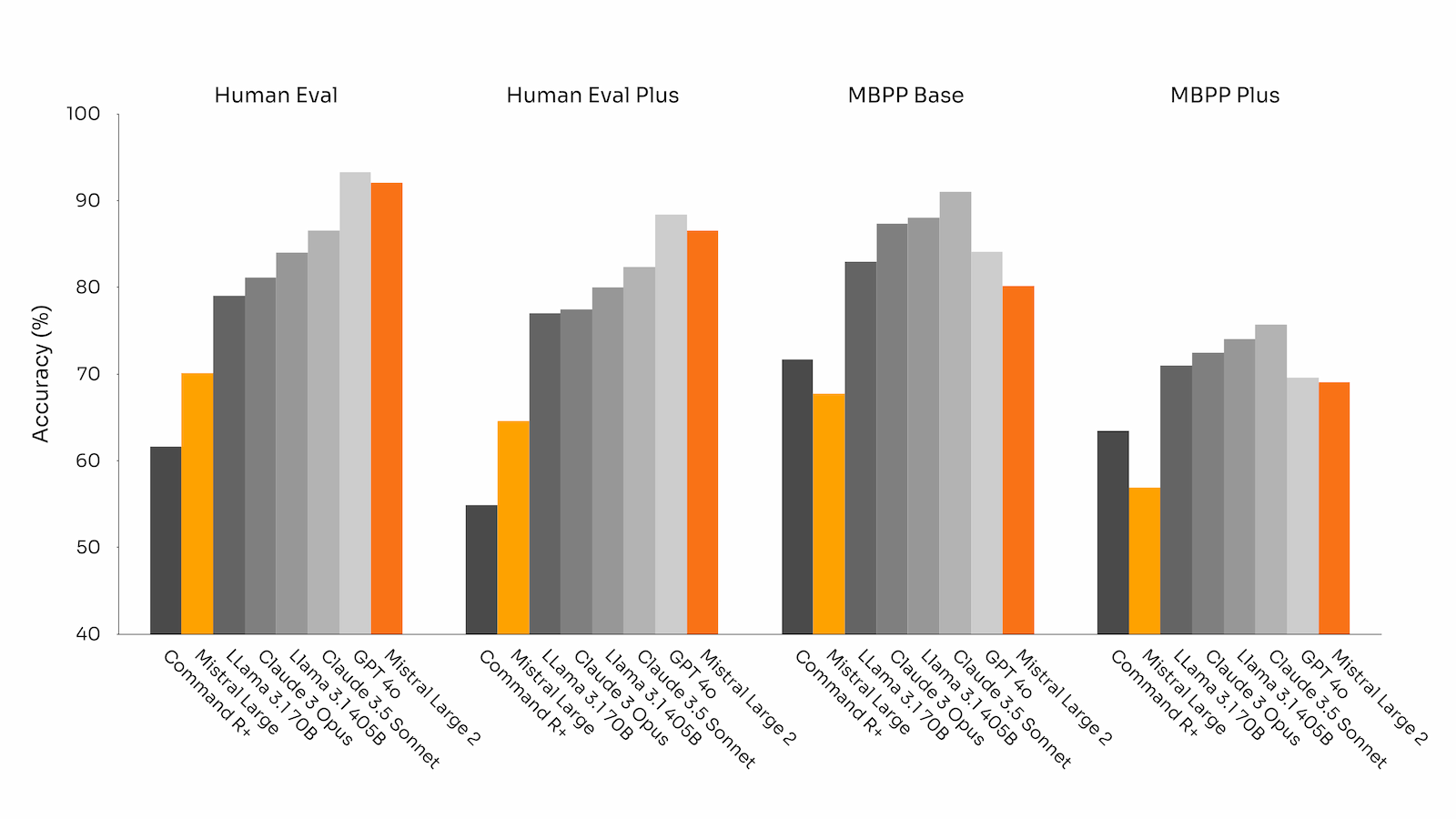
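To make the idea of a performance/cost Pareto front concrete: a model lies on the front if no other model is both no more expensive to serve and at least as accurate, while being strictly better on one of the two. The sketch below computes such a front. The model names, costs, and accuracies are hypothetical toy values chosen for illustration, not Mistral's benchmark data.

```python
from typing import List, NamedTuple


class Model(NamedTuple):
    name: str
    cost: float      # hypothetical serving cost (lower is better)
    accuracy: float  # hypothetical benchmark accuracy in % (higher is better)


def dominates(a: Model, b: Model) -> bool:
    """True if a is no worse than b on both axes and strictly better on one."""
    no_worse = a.cost <= b.cost and a.accuracy >= b.accuracy
    strictly_better = a.cost < b.cost or a.accuracy > b.accuracy
    return no_worse and strictly_better


def pareto_front(models: List[Model]) -> List[Model]:
    """Return the models that no other model dominates, sorted by cost."""
    front = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(front, key=lambda m: m.cost)


# Toy data (hypothetical).
models = [
    Model("A", 1.0, 70.0),
    Model("B", 2.0, 78.0),
    Model("C", 3.0, 76.0),  # dominated by B: costs more and scores lower
    Model("D", 5.0, 84.0),
]

if __name__ == "__main__":
    print([m.name for m in pareto_front(models)])
```

Model C drops out because B is both cheaper and more accurate; A, B, and D each represent a trade-off that no other point beats, so a new model "sets a new point on the front" when it is not dominated by any existing one.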

Code & Reasoning

Building on its experience with Codestral 22B and Codestral Mamba, Mistral trained Mistral Large 2 on a very large proportion of code. Mistral Large 2 vastly outperforms the previous Mistral Large and performs on par with leading models.

Availability

Mistral AI has partnered with Google Cloud Platform, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai to provide access to Mistral Large 2, enabling developers and researchers to use its features in their own projects. These collaborations are intended to serve a wide range of applications, from research and development to commercial deployments.

This expansion lets users across the globe access Mistral Large 2 through several platforms, making it easier to integrate into diverse AI workflows.

With its performance and range of capabilities, Mistral Large 2 is applicable across industries such as finance, healthcare, and technology, for tasks ranging from code generation and multilingual applications to complex problem-solving.

Through these advances and partnerships, Mistral AI aims to make cutting-edge AI more accessible and effective for users worldwide.