The Technology Innovation Institute (TII) has introduced Falcon Mamba 7B, a state-of-the-art language model built on a state space architecture rather than a transformer, which sets a new benchmark for open-source models of its kind. The release marks a significant step forward in AI research.

Falcon Mamba 7B: Key Highlights

Falcon Mamba 7B is the first State Space Language Model (SSLM) in the Falcon series and introduces a new architecture for the family. It ranks as the top-performing open-source SSLM in the world, a result independently verified by Hugging Face.

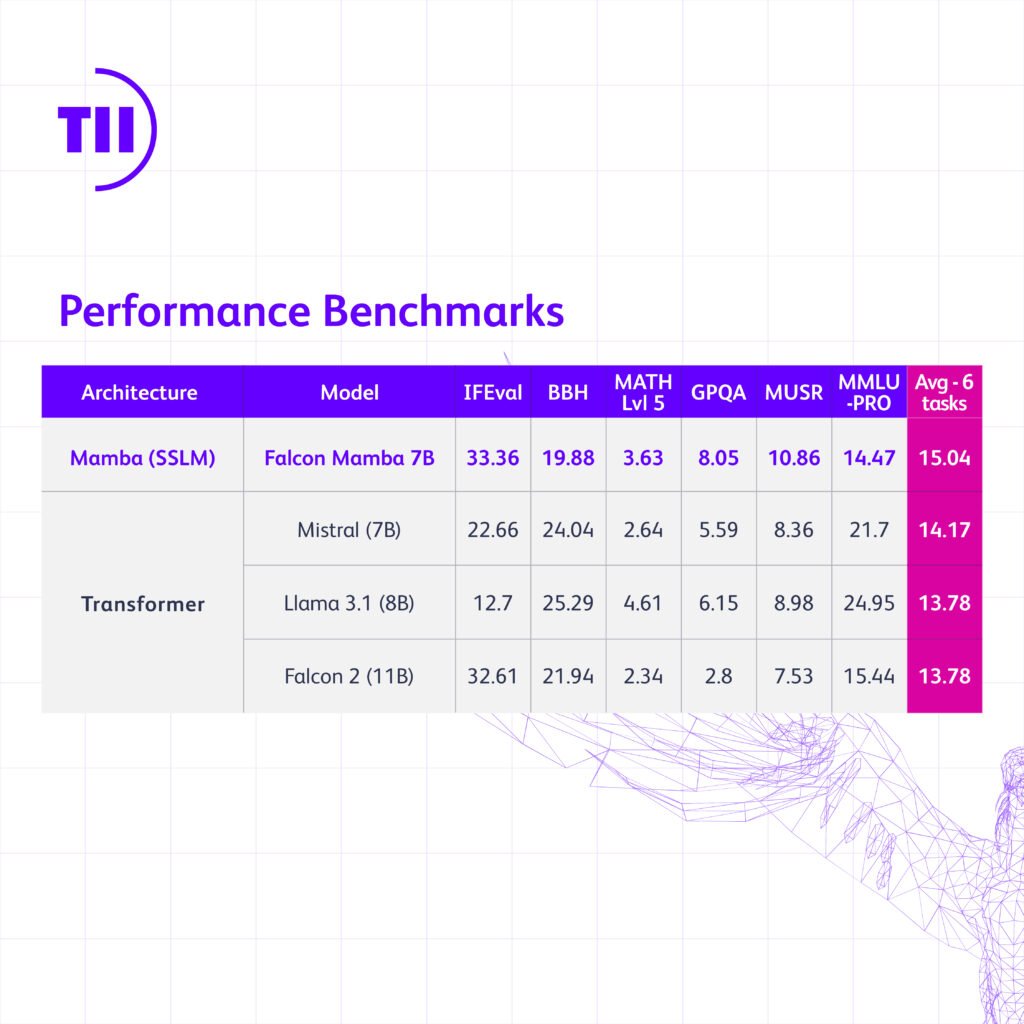

SSLMs are notable for their low memory requirements: instead of an attention cache that grows with every token, they carry a fixed-size state, so they can generate arbitrarily long text sequences without needing additional memory. Falcon Mamba 7B also outperforms leading transformer models such as Meta’s Llama 3.1 8B and Mistral 7B, reflecting Abu Dhabi’s pioneering role in AI research and development.
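To make the memory claim concrete, here is a toy sketch contrasting a diagonal linear state space recurrence with a transformer-style key/value cache. It is an illustration under simplifying assumptions, not Falcon Mamba's implementation: Mamba-style models make the recurrence parameters input-dependent (a "selective" scan) and stack many such layers, and the values of `a`, `b`, `c` and the state size used here are arbitrary. What it shows is that the recurrent state keeps a fixed size however many tokens are processed, while the cache grows by one entry per token.

```python
# Toy illustration only (not Falcon Mamba's actual code): a diagonal linear
# state space model (SSM) versus a transformer-style key/value cache.

def ssm_step(h, x, a, b):
    """One recurrence step, elementwise: h_t = a * h_{t-1} + b * x_t."""
    return [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]


def ssm_readout(h, c):
    """Output for the current token: y_t = c . h_t."""
    return sum(ci * hi for ci, hi in zip(c, h))


def run_ssm(xs, state_size=4):
    """Process a sequence; return outputs and the (constant) state size."""
    a = [0.9] * state_size   # arbitrary toy decay per state dimension
    b = [1.0] * state_size   # arbitrary toy input weights
    c = [0.25] * state_size  # arbitrary toy readout weights
    h = [0.0] * state_size   # fixed-size state, independent of len(xs)
    ys = []
    for x in xs:
        h = ssm_step(h, x, a, b)
        ys.append(ssm_readout(h, c))
    return ys, len(h)


def run_kv_cache(xs):
    """Transformer-style cache: one (key, value) entry kept per token."""
    cache = []
    for x in xs:
        cache.append((x, x))  # toy key/value pair
    return len(cache)


for n in (10, 1000):
    xs = [1.0] * n
    _, state_len = run_ssm(xs)
    print(n, state_len, run_kv_cache(xs))
# prints:
# 10 4 10
# 1000 4 1000
```

Going from 10 to 1,000 tokens multiplies the cache size by 100 but leaves the state size unchanged, which is why an SSLM's memory use during generation does not grow with output length.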

What’s New?

Falcon Mamba 7B is our first SSLM, and we’re excited about its capabilities, particularly its ability to process longer blocks of text. Because its memory cost does not grow with sequence length, the model can efficiently generate long sequences without extra memory.

Among transformer-based models, Falcon Mamba 7B outperforms Meta’s Llama 3.1 8B and Mistral 7B. Among SSLMs, it outperforms all other open-source models on the previous benchmarks and is poised to lead Hugging Face’s new, more challenging benchmark leaderboard.

How to Use Falcon Mamba 7B

You can access the model on Hugging Face: Falcon Mamba 7B on Hugging Face. We’ve also provided an interactive playground for you to try out the model: Falcon Mamba 7B Playground.
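For loading the model programmatically, here is a minimal sketch using the Hugging Face `transformers` library. It assumes the checkpoint is published under the id `tiiuae/falcon-mamba-7b` (check this on the model page), that `torch` and a `transformers` release with Falcon Mamba support are installed, and that the machine has enough memory for a 7B-parameter model. The `generate` helper and its defaults are hypothetical conveniences for this example, not part of any official API.

```python
# Minimal sketch: text generation with Falcon Mamba 7B via transformers.
# Assumptions: the repo id below, an installed torch + transformers version
# that supports the Falcon Mamba architecture, and sufficient memory.

MODEL_ID = "tiiuae/falcon-mamba-7b"  # assumed Hugging Face repo id


def generate(prompt: str, max_new_tokens: int = 64, model_id: str = MODEL_ID) -> str:
    """Hypothetical helper: load the model, generate, and decode the text."""
    # Imported lazily so this module can be loaded without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the prompt into PyTorch tensors and extend it with new tokens.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # The returned sequence contains the prompt followed by the continuation.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Explain state space models in one sentence.")` downloads the weights on first use and returns the prompt followed by the model's continuation. Loading inside the function keeps the sketch short; for repeated calls you would load the tokenizer and model once and reuse them.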
