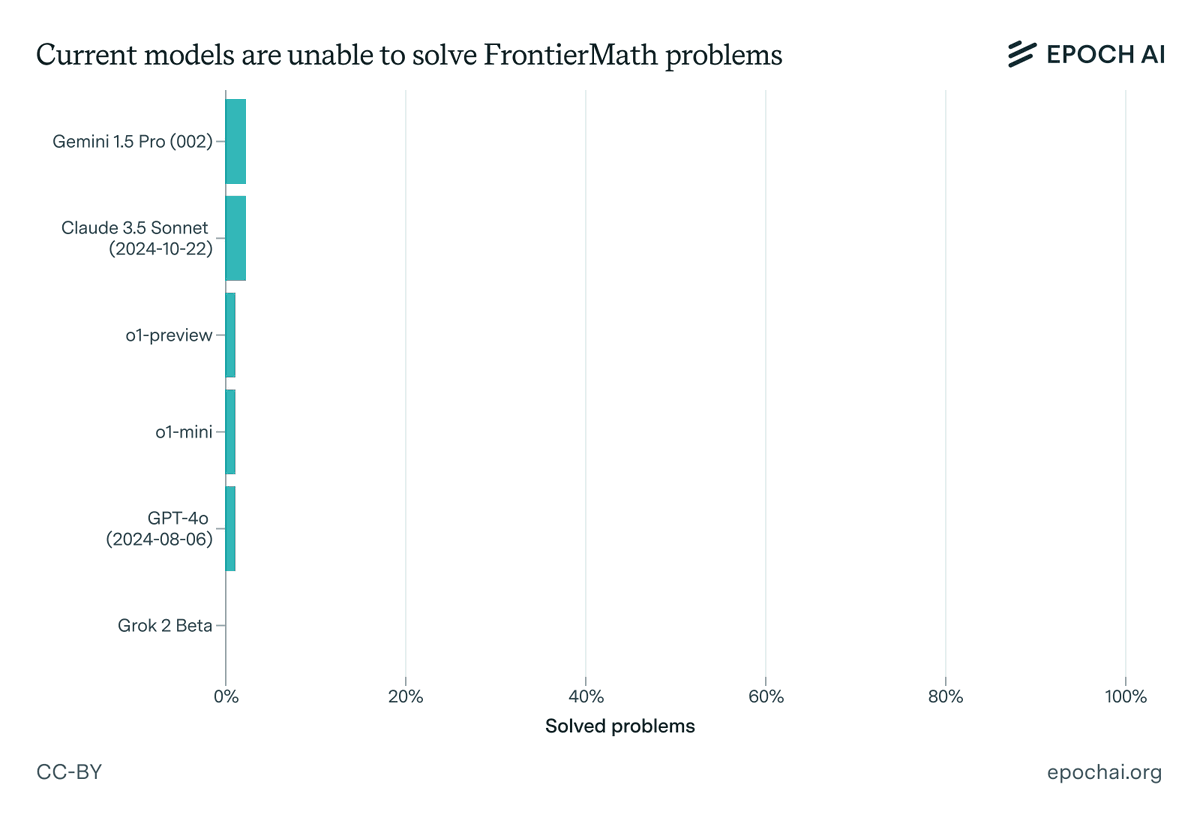

Today Epoch AI is launching FrontierMath, a benchmark for evaluating advanced mathematical reasoning in AI. We collaborated with 60+ leading mathematicians to create hundreds of original, exceptionally challenging math problems, of which current AI systems solve less than 2%.

Existing math benchmarks like GSM8K and MATH are approaching saturation, with AI models scoring over 90%—partly due to data contamination. FrontierMath significantly raises the bar. Our problems often require hours or even days of effort from expert mathematicians.

We evaluated six leading models, including Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro. Even with extended thinking time (10,000 tokens), Python access, and the ability to run experiments, success rates remained below 2%—compared to over 90% on traditional benchmarks.

Explore FrontierMath: We’ve released sample problems with detailed solutions, expert commentary, and our research paper: https://epochai.org/frontiermath

FrontierMath spans most major branches of modern mathematics—from computationally intensive problems in number theory to abstract questions in algebraic geometry and category theory. Our aim is to capture a snapshot of contemporary mathematics.

Key design principles

FrontierMath has three key design principles:

- All problems are new and unpublished, preventing data contamination,

- Solutions are automatically verifiable, enabling efficient evaluation,

- Problems are “guessproof,” with a low chance of being solved without proper reasoning.
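To make the second and third principles concrete, here is a minimal sketch of what automated checking of a single exact answer could look like. It is an illustration only, not Epoch AI's evaluation harness: the function names `verify_answer` and `blind_guess_probability`, the use of rational-number answers, and the sample values are all assumptions made for this example.

```python
from fractions import Fraction


def verify_answer(submitted: str, reference: Fraction) -> bool:
    """Hypothetical checker: accept only an exact match with the reference value."""
    try:
        # Parse text such as "42", "3/4", or "0.5" into an exact rational number.
        value = Fraction(submitted.strip())
    except (ValueError, ZeroDivisionError):
        # Unparseable output counts as a wrong answer.
        return False
    return value == reference


def blind_guess_probability(answer_space_size: int) -> Fraction:
    """Chance that one uniform random guess hits the single correct answer."""
    return Fraction(1, answer_space_size)


if __name__ == "__main__":
    reference = Fraction(367707)  # made-up reference answer for illustration
    print(verify_answer("367707", reference))   # exact match is accepted
    print(verify_answer("367708", reference))   # a near miss is rejected
    print(blind_guess_probability(10**6))       # odds of a lucky guess
```

Because the check is an exact comparison, no human grader is needed, and the larger the space of plausible answers, the less a lucky guess can contribute to a model's score.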

What do experts think? We interviewed Fields Medalists Terence Tao (2006), Timothy Gowers (1998), Richard Borcherds (1998), and IMO coach Evan Chen. They unanimously described our research problems as exceptionally challenging, requiring deep domain expertise.

Mathematics offers a uniquely suitable sandbox for evaluating complex reasoning. It requires creativity and extended chains of precise logic—often involving intricate proofs—that must be meticulously planned and executed, yet allows for objective verification of results.

Beyond mathematics, we think that measuring AI’s aptitude in creative problem-solving and maintaining precise reasoning over many steps may offer insights into progress toward the systematic, innovative thinking needed for scientific research.

You can explore the full methodology, examine sample problems with detailed solutions, and see how current AI systems perform on FrontierMath: https://epoch.ai/frontiermath
