Meta has released Segment Anything Model 2 (SAM 2), the successor to its Segment Anything Model (SAM). SAM 2 extends its predecessor to support real-time, promptable object segmentation in both images and videos. The SAM 2 code and model weights are released under an Apache 2.0 license and the accompanying SA-V dataset under CC BY 4.0, inviting developers and researchers to build their own experiences and applications.

Revolutionizing Object Segmentation

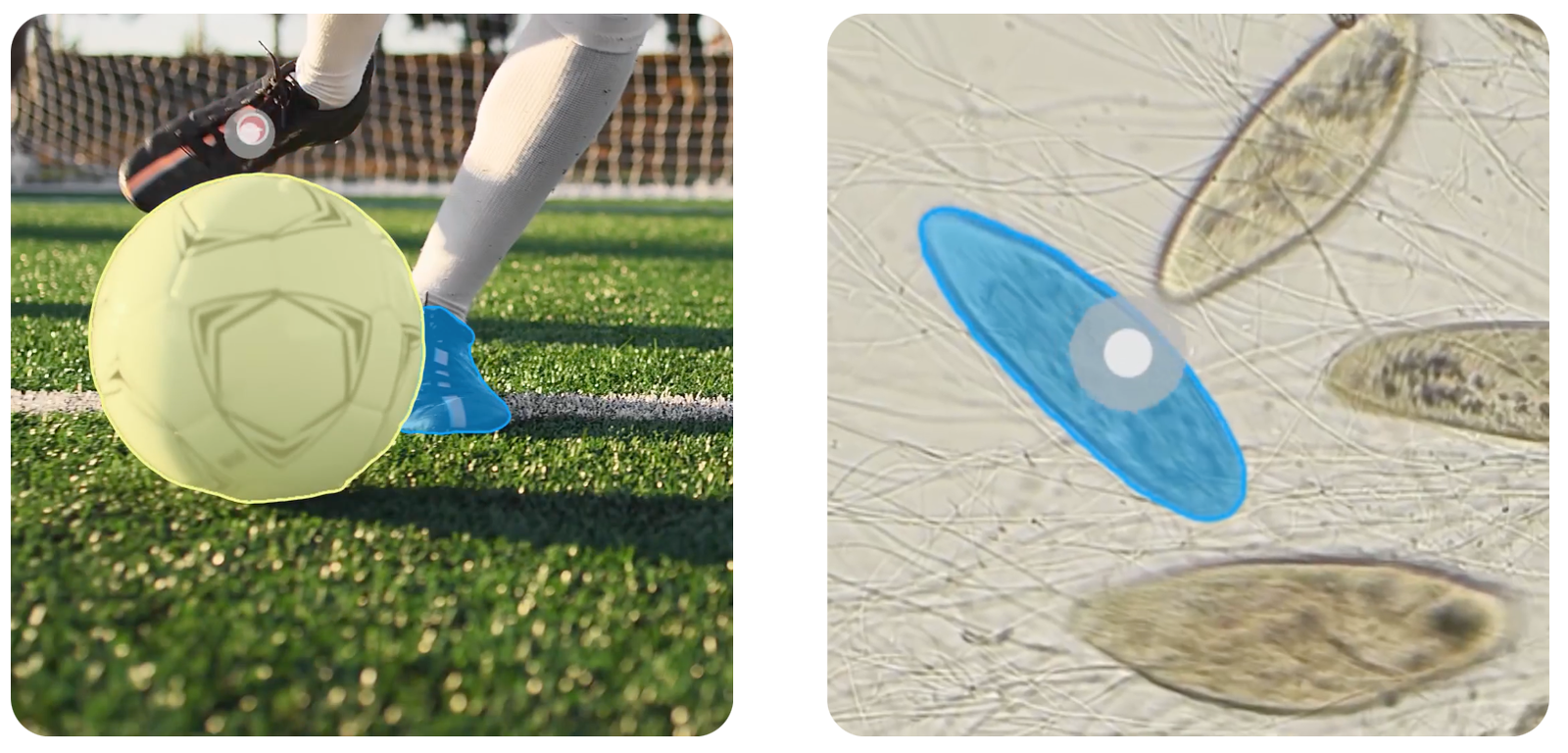

Object segmentation, the process of identifying which pixels in an image or video belong to an object and isolating them, is a cornerstone of computer vision. Meta reports that SAM 2 is more accurate than the original SAM on image segmentation and outperforms existing approaches on video segmentation.
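To make the idea concrete, here is a minimal sketch of what a segmentation result looks like: a binary mask with one entry per pixel. The tiny image, both masks, and the helper names `isolate` and `iou` are illustrative assumptions, not part of SAM 2. Intersection over union (IoU) is a standard way to score how well a predicted mask matches a reference mask.

```python
# Toy 3x4 "image" of pixel intensities (made-up values for illustration).
image = [
    [3, 3, 9, 9],
    [3, 8, 9, 9],
    [3, 3, 3, 9],
]

# Binary masks: 1 marks a pixel that belongs to the object, 0 is background.
predicted = [
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 1],
]
reference = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 1],
]


def isolate(image, mask, background=0):
    """Keep only the pixels selected by the mask; blank out the rest."""
    return [
        [px if m else background for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def iou(mask_a, mask_b):
    """Intersection over union of two binary masks of the same shape."""
    pairs = [(a, b) for ra, rb in zip(mask_a, mask_b) for a, b in zip(ra, rb)]
    intersection = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return intersection / union if union else 1.0


cutout = isolate(image, predicted)
score = iou(predicted, reference)
print(cutout)
print(round(score, 3))
```

Here the predicted mask covers one pixel too many, so five of the six pixels in the union overlap and the score is 5/6.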

One of SAM 2’s standout features is zero-shot generalization: it can segment objects in previously unseen visual content without custom adaptation. According to Meta, it does so while requiring about three times less interaction time than existing models.

Broad Impact Across Disciplines

Since its introduction, the original SAM has been adopted across many fields, powering new AI-enabled features in Meta’s own apps as well as applications in science, medicine, and beyond. SAM 2 is expected to extend this impact further.

The original SAM has already been integrated into major data annotation platforms, significantly reducing the time required for human annotation. In marine science, it aids in analyzing coral reefs; in satellite imagery analysis, it supports disaster relief efforts; and in the medical field, it assists in detecting skin cancer.

Open Science and Collaboration

Mark Zuckerberg emphasized the transformative potential of open-source AI in an open letter, highlighting its ability to enhance productivity, creativity, and quality of life. Meta’s commitment to open science is evident in its sharing of SAM 2’s research, code, and data.

The SA-V dataset, which contains about 51,000 videos and more than 600,000 masklets (object masks tracked through time), is now available under a CC BY 4.0 license. Additionally, a web demo lets users interact with SAM 2 in real time.

Technical Innovations and Applications

SAM 2’s architecture generalizes the image segmentation capabilities of the original SAM to the video domain. It adds a memory mechanism that lets the model track objects across video frames through complex motion, occlusion, and changing lighting conditions.
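The role of memory in tracking can be illustrated with a toy sketch. This is not SAM 2’s actual implementation: the class `ToyMemoryBank`, the function `track_object`, and the idea of matching objects by their center position are simplifying assumptions made purely for illustration. The sketch keeps a short first-in, first-out memory of where the object was recently seen, and uses it to re-identify the object after a frame in which it is hidden.

```python
from collections import deque
from math import dist


class ToyMemoryBank:
    """Hypothetical helper: remembers the last few confirmed object positions."""

    def __init__(self, capacity=3):
        # A bounded deque drops the oldest entry automatically (FIFO).
        self.recent = deque(maxlen=capacity)

    def add(self, position):
        self.recent.append(position)

    def expected_position(self):
        """Average of the remembered positions."""
        xs = [p[0] for p in self.recent]
        ys = [p[1] for p in self.recent]
        return (sum(xs) / len(xs), sum(ys) / len(ys))


def track_object(frames, first_prompt, capacity=3):
    """Follow one object through frames given as lists of candidate centers.

    `first_prompt` stands in for a user click on the object in the first frame.
    An empty candidate list models a frame where the object is occluded.
    """
    memory = ToyMemoryBank(capacity)
    memory.add(first_prompt)
    path = []
    for candidates in frames:
        if not candidates:
            # Occlusion: report nothing, but keep the memory untouched.
            path.append(None)
            continue
        target = memory.expected_position()
        best = min(candidates, key=lambda c: dist(c, target))
        memory.add(best)
        path.append(best)
    return path


frames = [
    [(10, 10), (50, 50)],  # two objects visible
    [(12, 10), (50, 52)],
    [],                    # target hidden in this frame
    [(16, 11), (49, 55)],  # target reappears
]
result = track_object(frames, first_prompt=(10, 10))
print(result)
```

Because the memory survives the empty frame, the tracker picks up the same object again when it reappears instead of switching to the other candidate.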

The model runs inference at approximately 44 frames per second, fast enough for real-time applications, which makes it a valuable tool for content creators, autonomous vehicles, and medical research.
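As a rough back-of-the-envelope check, the 44 frames-per-second figure can be turned into a per-frame time budget and compared with common video frame rates. The sketch below assumes the reported figure holds on the hardware in use, which will not be true everywhere; the helper names are illustrative.

```python
MODEL_FPS = 44.0  # throughput quoted in the article; hardware dependent


def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def keeps_up(model_fps, video_fps):
    """True if the model processes frames at least as fast as they arrive."""
    return model_fps >= video_fps


budget = frame_budget_ms(MODEL_FPS)
print(f"Per-frame time at {MODEL_FPS:.0f} fps: {budget:.1f} ms")

for video_fps in (24, 30, 60):
    verdict = "keeps up" if keeps_up(MODEL_FPS, video_fps) else "falls behind"
    print(f"{video_fps} fps video: {verdict}")
```

At this rate each frame takes roughly 22.7 ms, which is enough for 24 and 30 fps video but not for a 60 fps stream without skipping frames.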

Challenges and Future Directions

While SAM 2 marks a significant advancement, it still struggles with drastic camera viewpoint changes, long occlusions, and crowded scenes. Meta mitigates these limitations by making the model interactive, so users can correct its output manually when necessary.

Future work aims to improve the model’s ability to segment multiple objects simultaneously and to produce temporally smoother results in fast-moving scenes.

Community Engagement and Future Prospects

Meta invites the AI community to explore SAM 2, use the dataset, and try the web demo. This collaborative effort is expected to accelerate progress in universal video and image segmentation, paving the way for new applications across industries. SAM 2’s potential also extends to everyday use, such as AR glasses that identify and interact with objects in real time.

In summary, SAM 2 represents a significant step forward in object segmentation technology, offering powerful new tools for researchers, developers, and industries. Meta’s open-source approach and commitment to collaboration should help drive further advances in computer vision and generative AI.